Phi-4 AI reasoning models optimize computing efficiency while rivaling larger AI architectures. Explore their design and impact.

With AI and AI-based services booming, there is a surplus of AI models catering to various user needs. From image creation and document generation and formatting to disease diagnosis, financial assistance, and education, the applications of AI are wide-ranging.

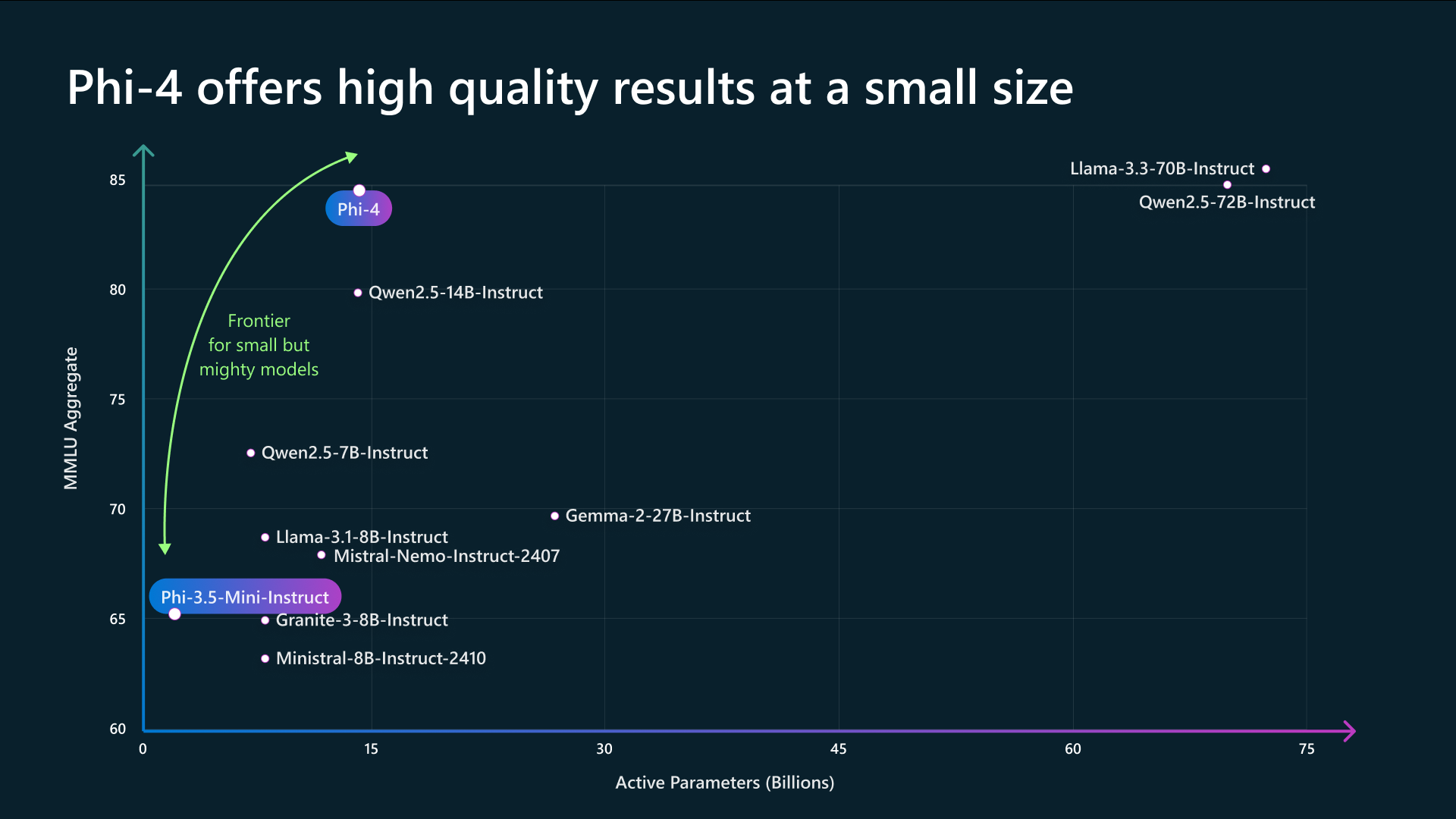

Microsoft has now made its Phi-4 AI reasoning models available to the public, adding to this growing market. These Phi-4 models differ from standard AI models: they are more compact while retaining strong capabilities. They are also built for edge computing and aim to balance efficiency and performance, which makes them well suited to on-device AI processing.

Understanding Phi-4 AI Reasoning Models

So far, Microsoft has introduced three variants: Phi-4-reasoning, Phi-4-reasoning-plus, and Phi-4-mini-reasoning. These models are optimized, first and foremost, to run efficiently on devices such as Windows PCs and mobile platforms.

Don’t let their compact sizes lead you to underestimate them. These models exhibit strong reasoning capabilities, comparable even to significantly larger models. Here is what each variant offers:

- Phi-4-reasoning (14B parameters) – This variant is trained for complex reasoning workloads. It has shown competitive results in standard benchmarks.

- Phi-4-reasoning-plus – This variant builds on the same base model but uses 1.5 times more tokens during inference. This increased token count yields enhanced accuracy.

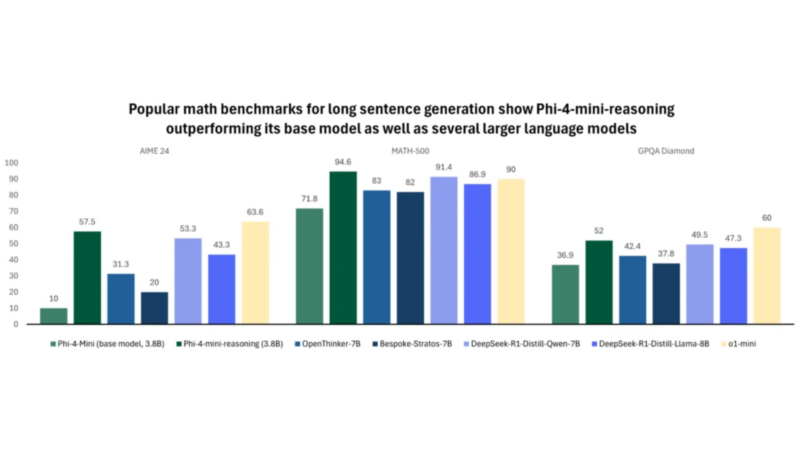

- Phi-4-mini-reasoning (3.8B parameters) – The mini variant is the smallest of the three. Despite its more compact size, it achieves near parity with significantly larger models.

A key advantage that Phi-4 reasoning models have over other AI models is their compatibility with Windows Copilot+ PCs. On these machines, they can use the built-in Neural Processing Unit (NPU) for local AI processing. As a result, you can expect more responsive AI-driven applications, especially in scenarios where cloud-based inference is impractical.
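To put the parameter counts above in perspective for on-device use, here is a rough back-of-the-envelope sketch in Python. It estimates weights-only memory as parameters multiplied by bits per parameter. The precisions shown (16-bit, 8-bit, 4-bit) are illustrative assumptions, not figures from Microsoft, and the helper name `weight_memory_gb` is hypothetical. Real memory use would be higher once activations and runtime overhead are included.

```python
# Parameter counts as stated in this article.
PARAM_COUNTS = {
    "Phi-4-reasoning": 14_000_000_000,
    "Phi-4-mini-reasoning": 3_800_000_000,
}

# Assumed storage precisions, chosen only for illustration.
ASSUMED_BITS = (16, 8, 4)


def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate weights-only memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / 1e9


for name, params in PARAM_COUNTS.items():
    for bits in ASSUMED_BITS:
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, the 14B model needs roughly 28 GB for its weights at 16-bit and about 7 GB at 4-bit, while the 3.8B mini variant needs roughly 7.6 GB at 16-bit and about 1.9 GB at 4-bit. That gap is one reason the mini variant is the more natural fit for memory-constrained devices.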

Performance Benchmarks and Comparisons

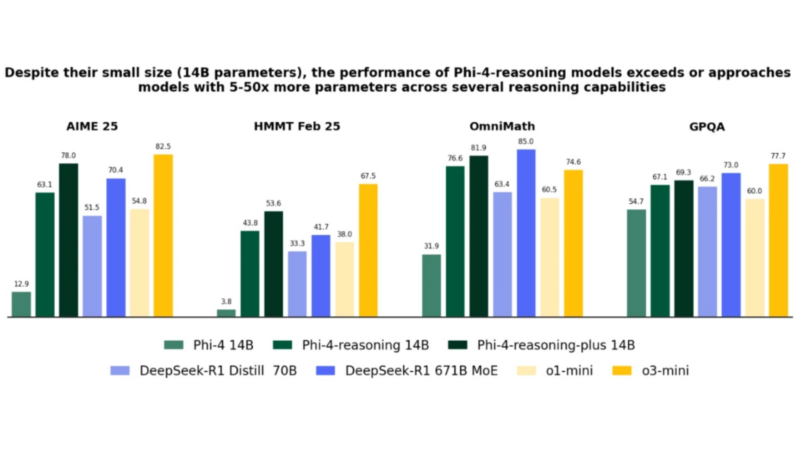

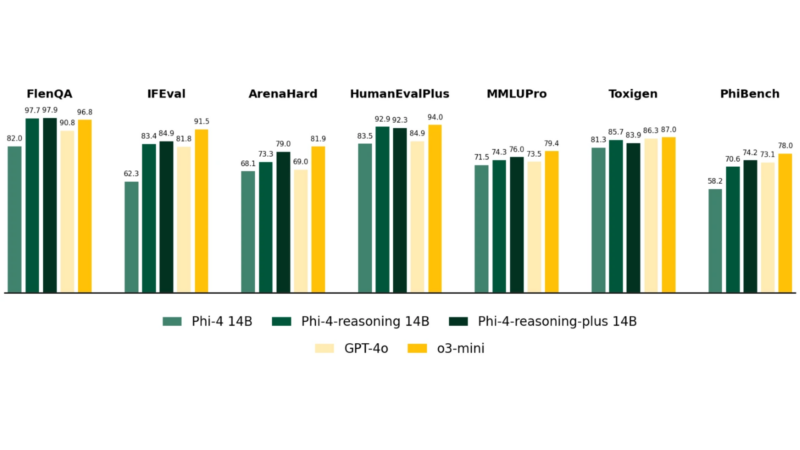

According to benchmarks such as GPQA, OmniMath, AIME 25, and HMMT, the Phi-4 reasoning models compete effectively with prominent names in the AI industry like DeepSeek R1 (671B) and o3-mini.

Although the Phi-4 models do not surpass these larger AI architectures, they still rival them closely. This result showcases Microsoft’s advancements in data optimization and curation.

Phi-4 AI Reasoning Models Training Methodology

According to Microsoft, the company took a meticulous approach to data curation and to the use of synthetic datasets. This approach allows Phi-4 models to compete with larger ones. These small language models (SLMs) are fine-tuned using structured reasoning demonstrations from OpenAI’s o3-mini.

Furthermore, the mini variant is trained on synthetic data generated by DeepSeek-R1. Using these techniques, Microsoft can offer compact SLMs that deliver strong reasoning performance without the need for very large model sizes.

