AI Ray Reconstruction fixes noisy ray tracing with better visuals and smoother frames. Here’s how it works and why it matters.

Ray tracing simulates how light behaves in the real world. It tracks how light reflects off metal, bounces around corners, and passes through glass, which is what gives shadows and reflections in some games their realistic look. Doing all that requires significant computing power. If the renderer doesn’t trace enough rays per pixel, the image looks grainy, like static. The brute-force fix is to trace more rays, which puts a heavy load on the GPU and quickly drags down frame rates.
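To see why too few rays produce static, here is a minimal Python sketch. It is a toy model, not a real renderer: the function names, the hit-or-miss ray model, and the brightness value of 0.5 are all assumptions made for illustration. Each pixel is estimated by averaging random ray samples, and the spread of those estimates across many identical pixels stands in for visible noise.

```python
import random
import statistics


def noisy_pixel(rays_per_pixel, true_brightness=0.5, rng=None):
    """Estimate one pixel by averaging random ray samples.

    Toy assumption: each ray either reaches the light (1.0) or misses (0.0),
    and it reaches the light with probability equal to the true brightness.
    """
    rng = rng or random
    hits = sum(
        1.0 if rng.random() < true_brightness else 0.0
        for _ in range(rays_per_pixel)
    )
    return hits / rays_per_pixel


def pixel_noise(rays_per_pixel, pixels=2000, seed=0):
    """Standard deviation of the estimate across many identical pixels."""
    rng = random.Random(seed)
    estimates = [noisy_pixel(rays_per_pixel, rng=rng) for _ in range(pixels)]
    return statistics.pstdev(estimates)


if __name__ == "__main__":
    for rays in (1, 4, 16, 64):
        print(f"{rays:3d} rays per pixel -> noise {pixel_noise(rays):.3f}")
```

In this model the noise only halves each time the ray count quadruples, which is why tracing more rays gets expensive so quickly.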

AI Ray Reconstruction addresses this cost. It uses trained neural networks to fill in missing lighting information, so scenes look polished without tracing more rays or sacrificing frame rates. This makes ray tracing more accessible, letting more gamers enjoy high-quality visuals even on mid-range hardware.

What Is AI Ray Reconstruction?

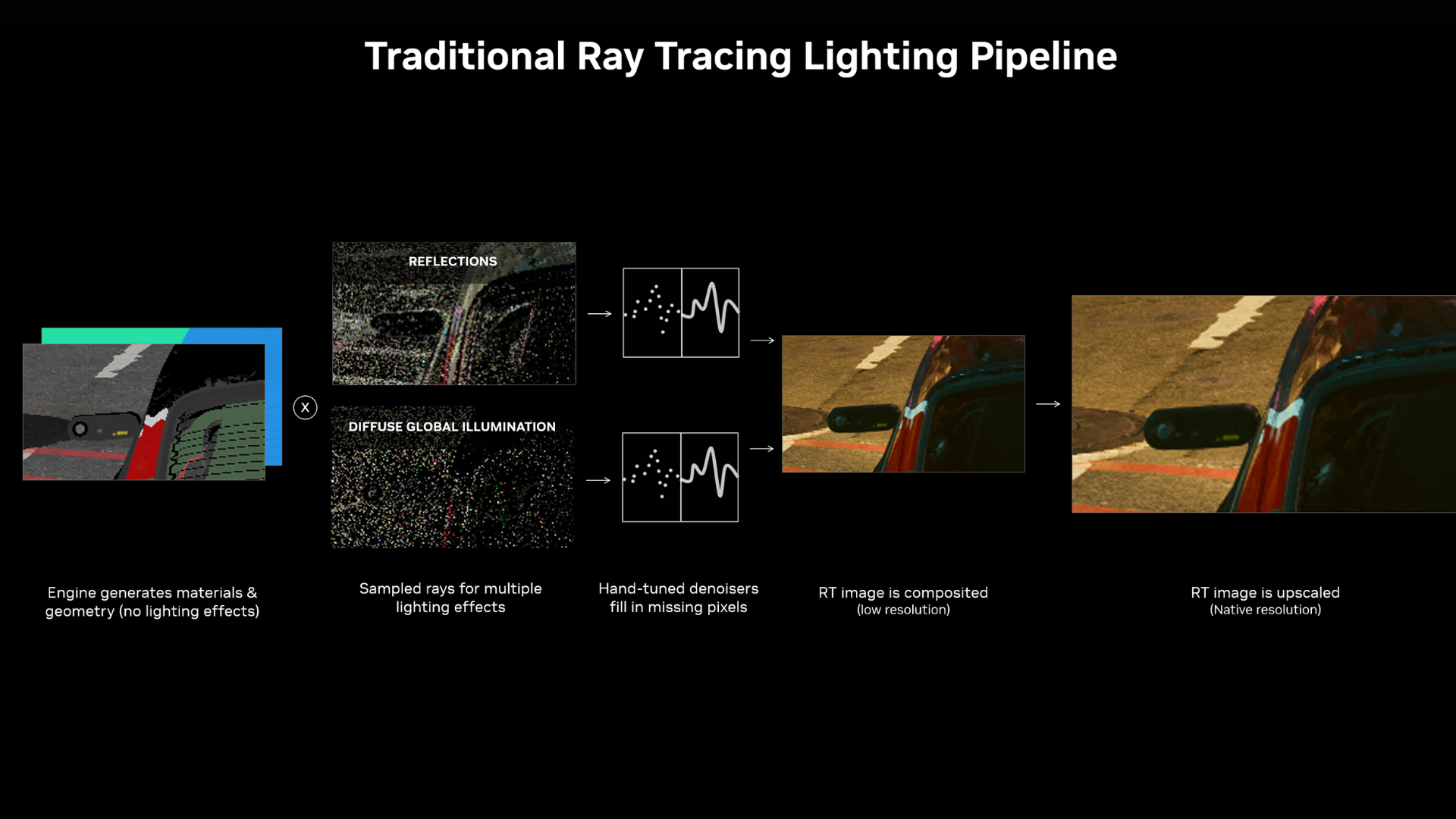

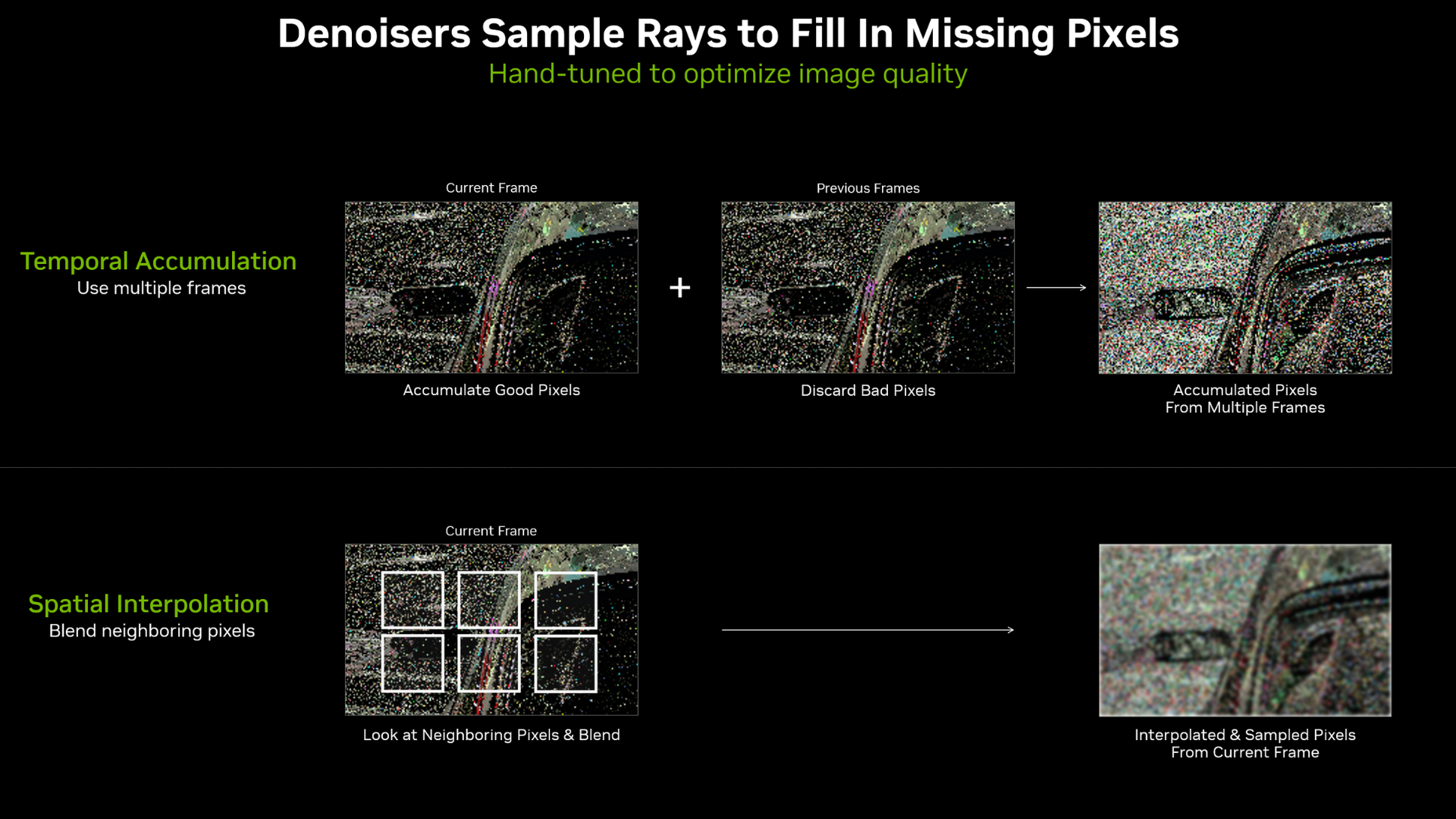

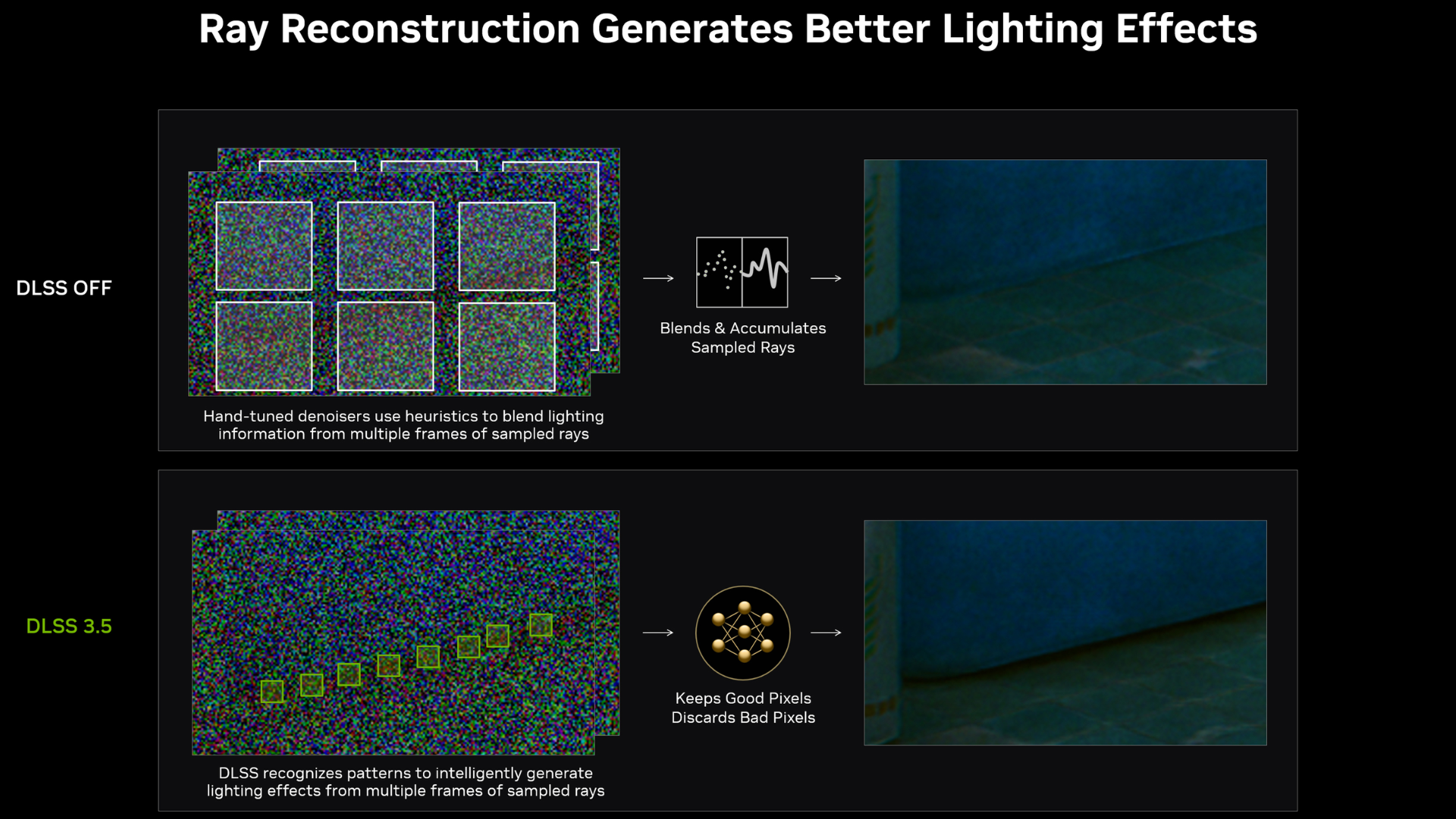

At its core, AI Ray Reconstruction is a machine-learning technique that cleans up ray-traced images in real time. Traditional rendering uses hand-tuned denoisers to smooth out noise, but these filters often blur important details or fail in complex lighting. AI Ray Reconstruction instead uses a neural network trained on large sets of ray-traced data to learn how light behaves. This allows it to restore reflections, ambient shadows, and indirect lighting with better consistency and fewer errors.

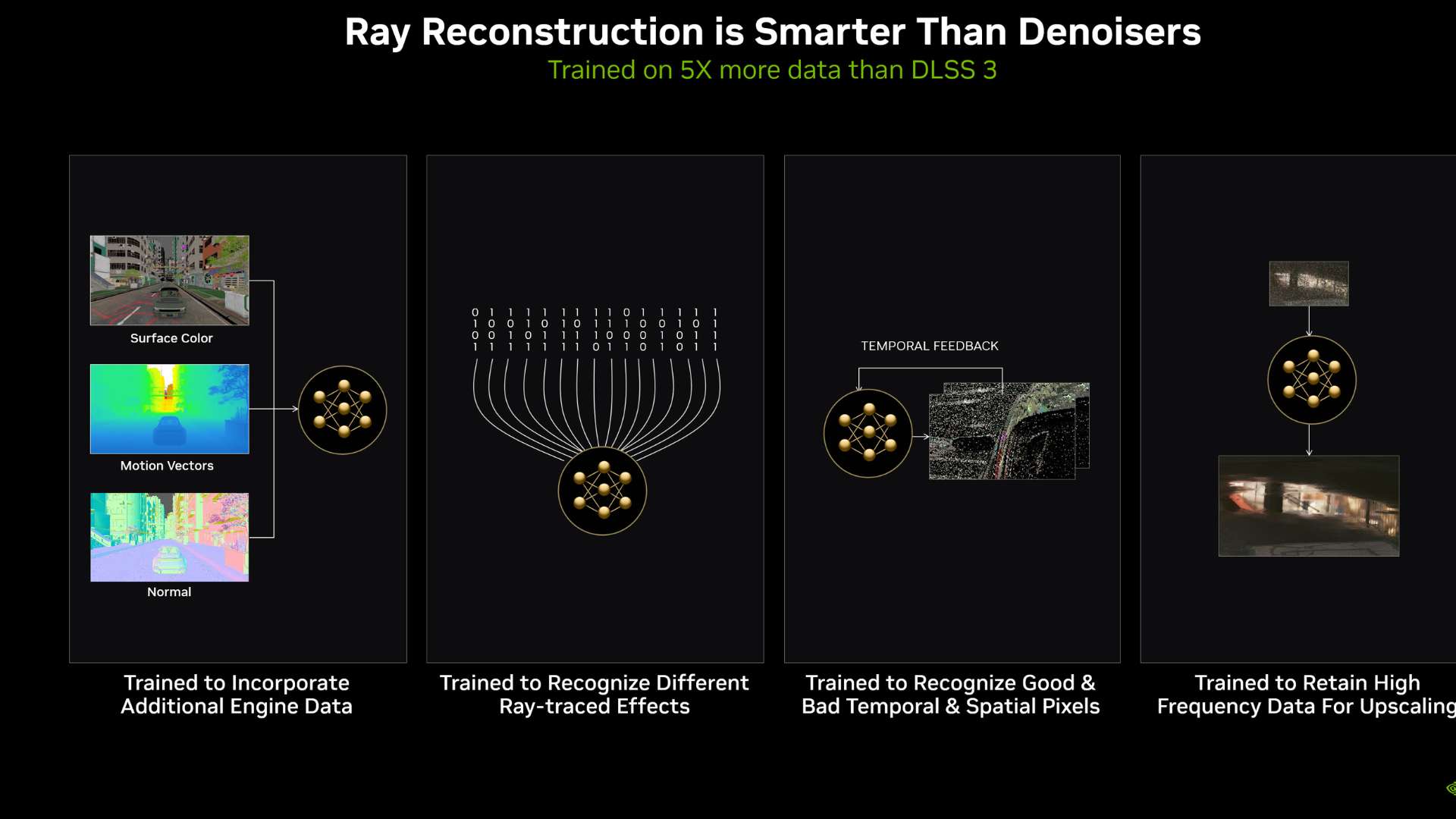

NVIDIA introduced this feature in DLSS 3.5, replacing multiple hand-tuned denoisers with one AI model. The network, which NVIDIA says was trained on five times more data than DLSS 3, reconstructs missing ray information and upscales the image in a single pass.

How the System Works

The rendering pipeline first produces a noisy frame by casting a limited number of rays per pixel. This raw output, along with motion vectors, depth, surface normals, and other metadata, is fed into the AI model. The neural network uses spatial context (neighboring pixels) and temporal context (previous frames) to rebuild lighting and shading detail. Because it was trained on a wide range of visual examples, the network has learned how different lighting effects should look in motion. It doesn’t rely on a separate filter for each effect. Instead, it cleans up noise, sharpens the image, and upscales it all at once. This reduces the usual strain on the GPU and produces visuals that stay clear and steady as scenes change.
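To make "temporal context" more concrete, here is a minimal Python sketch of temporal accumulation, a classic hand-written denoising step. It is not NVIDIA's neural network and is not taken from any DLSS API. The `FrameInputs` class, the `temporal_accumulate` function, the one-dimensional scanline, and the `blend` and `depth_tolerance` values are all hypothetical. The sketch only shows how motion vectors and depth let a renderer reuse the previous frame's result for the same surface.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FrameInputs:
    """Hypothetical per-frame buffers, one value per pixel on a 1D scanline."""
    noisy_color: List[float]  # raw low-ray-count output
    motion: List[int]         # how many pixels each surface moved since last frame
    depth: List[float]        # distance from the camera


def temporal_accumulate(current, history, history_depth,
                        blend=0.2, depth_tolerance=0.1):
    """Blend each pixel with the value it had in the previous frame.

    The motion vector is followed back to where the surface was last frame.
    If that position is off-screen, or its depth shows it was a different
    surface, the history is rejected and the noisy value is used as-is.
    """
    out = []
    for x, color in enumerate(current.noisy_color):
        prev_x = x - current.motion[x]
        valid = (
            0 <= prev_x < len(history)
            and abs(history_depth[prev_x] - current.depth[x]) <= depth_tolerance
        )
        if valid:
            out.append(blend * color + (1.0 - blend) * history[prev_x])
        else:
            out.append(color)
    return out
```

A hand-tuned pipeline needs fixed rules like this depth check, often one set per lighting effect. The approach described above replaces those rules with a single trained model that receives the same kinds of inputs.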

Visual Benefits and Performance

The visual differences are easy to see. Reflections become sharper, shadows smoother, and noise and flicker are greatly reduced. In titles like Cyberpunk 2077 and Portal with RTX, DLSS 3.5’s Ray Reconstruction delivers noticeably cleaner effects than standard denoisers. Because fewer rays are needed and a single AI pass replaces several separate denoising steps, the overall rendering cost is lower.

This feature arrived in Cyberpunk 2077’s Phantom Liberty and has since come to Alan Wake 2, Portal with RTX, Black Myth: Wukong, and Naraka: Bladepoint. Alan Wake 2 uses full path tracing in darker scenes, and AI Ray Reconstruction enhances its ambient lighting on RTX cards. Portal with RTX also offers a clear before‑and‑after comparison showing dramatic improvements.

Why AI Ray Reconstruction Matters

For gamers, this translates to cinematic lighting on mid-range systems. GPUs that struggled to maintain ray tracing at playable frame rates can now deliver stable, realistic visuals. That makes immersive lighting a practical feature rather than a gimmick.

Developers also benefit. They no longer need to set up and tune separate denoisers for different lighting elements. Instead, they can rely on a unified AI model that adapts to varying scenes. That saves time and leads to consistent visual results across projects. Overall, AI Ray Reconstruction makes ray tracing more practical: it lowers the hardware threshold while improving everyday gameplay visuals.

What Comes Next

NVIDIA took this further with DLSS 4, launched in early 2025 with the RTX 50 series. It uses transformer‑based models for sharper details, reduced memory usage, and improved temporal stability. Indiana Jones and the Great Circle is among the early games adding DLSS 4 Ray Reconstruction via driver updates.

Meanwhile, AMD and Sony are experimenting with AI-based denoising. Their joint Project Amethyst aims to bring neural-network-based graphics improvements to future PlayStation platforms and PC environments. That could bring similar benefits to a broader audience and extend AI reconstruction to consoles.
