Wondering if you can run ChatGPT or Gemini offline on your PC? Here’s what works, what doesn’t, and the best local AI tools to try now.

The idea of running ChatGPT or Google Gemini straight from your own gaming PC is steadily gaining popularity. With modern GPUs and powerful CPUs, today’s gaming rigs seem more than capable of handling large language models. It’s a fair assumption: if your machine can handle Cyberpunk 2077 at max settings, why not a simple AI chatbot? But there’s more to the story than raw performance.

Most people asking this question are hoping to avoid the cloud entirely. They want offline AI for privacy, lower latency, or to explore how far their machine can go. So, can you run ChatGPT or Gemini natively on your PC? Not exactly, but you can get close with the right setup.

What It Means To Run ChatGPT or Gemini Natively

Running ChatGPT or Gemini natively means loading the full model and handling all processing on your own PC: no internet access, no APIs, just local resources doing the work. In practice, that requires the full model weights, an inference engine to run them, and a way to interact with the model offline. Right now, OpenAI does not offer any downloadable version of GPT-4, and Google has not released Gemini model weights publicly. That means you cannot run the actual ChatGPT or Gemini models completely offline. But that doesn’t mean you’re out of options.

Alternatives That Let You Run ChatGPT or Gemini Natively

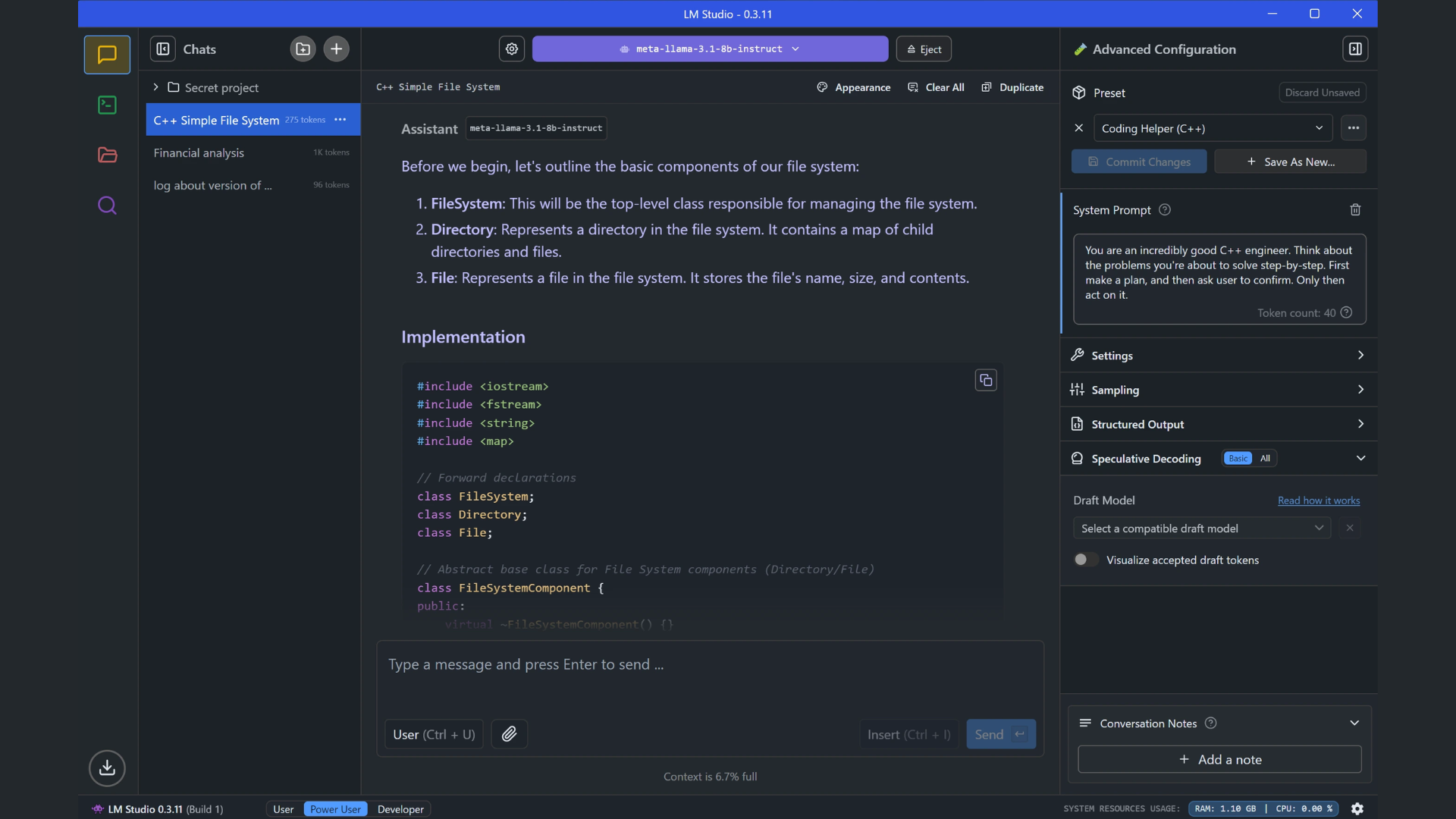

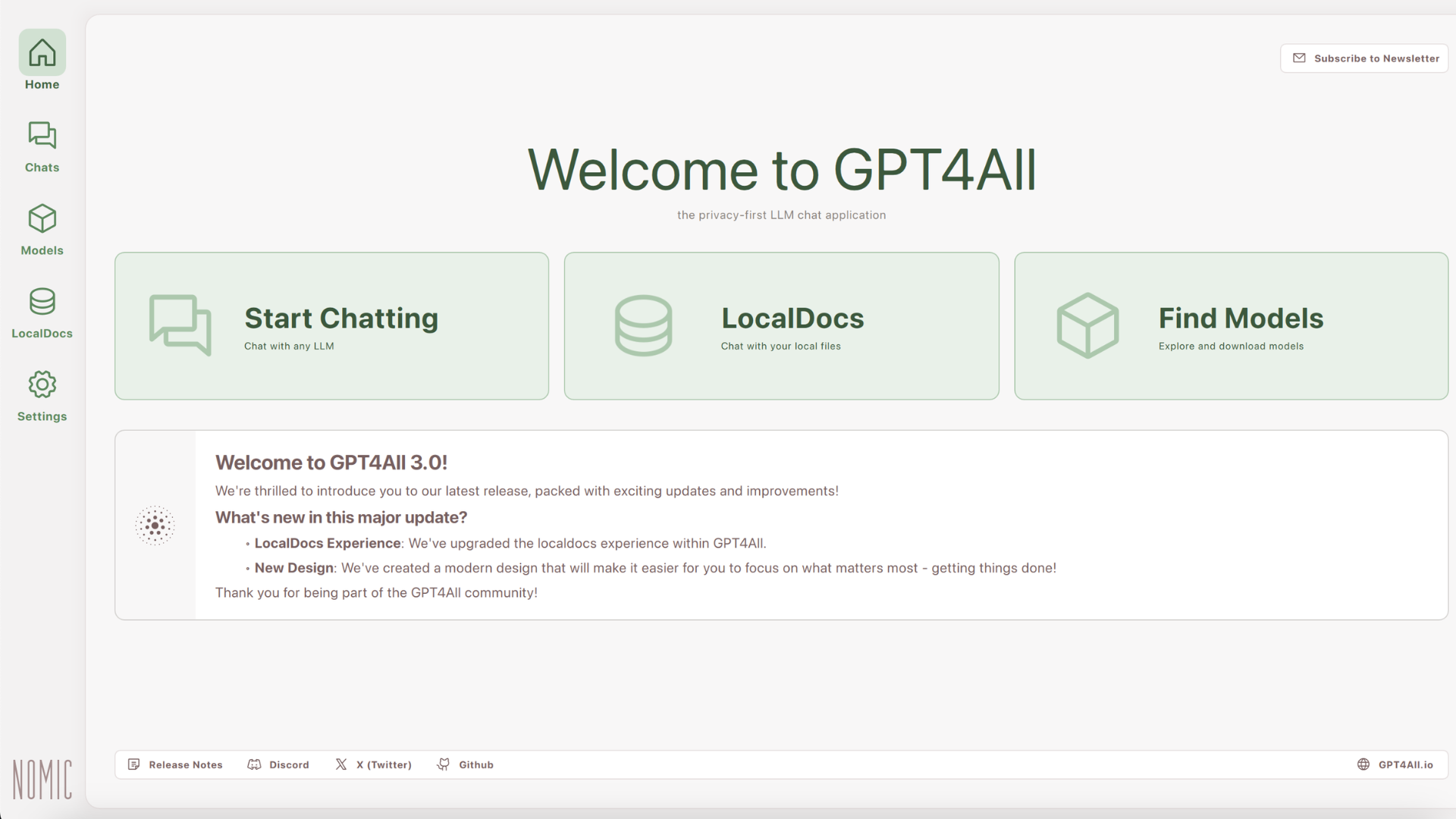

While the official models are locked to the cloud, several open-source alternatives let you replicate the experience. Tools like GPT4All, LM Studio, and LocalAI allow users to run smaller, open models on Windows or Linux. These models mimic the capabilities of ChatGPT without calling home to OpenAI servers.
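As a rough illustration of what talking to one of these tools looks like: LM Studio and LocalAI can both expose an OpenAI-style HTTP endpoint on your own machine. The sketch below assumes such a server is already running with a model loaded. The address `http://localhost:1234/v1` (LM Studio’s usual default; LocalAI typically listens on port 8080) and the model name `local-model` are assumptions to adjust for your setup.

```python
import json
import urllib.request

# Assumption: address of a local OpenAI-compatible server (LM Studio's usual default).
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        # Hypothetical model name; use whatever your local server has loaded.
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt, **kwargs):
    """Send the prompt to the local server and return the reply text."""
    request = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a server running, `ask_local_model("Explain VRAM in one sentence.")` would return the model’s reply as a string. The request goes to `localhost`, so the prompt is handled on your own machine.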

Similarly, for those interested in the Gemini experience, Gemini CLI is a command-line tool that talks to Google’s Gemini API. It still requires internet access, so the model itself is not running locally, but it brings Gemini into a terminal on your PC. If your goal is to explore conversational AI on your own hardware, these tools are your best bet.

Hardware Requirements to Run ChatGPT or Gemini Natively

To run local models that behave like ChatGPT or Gemini, your PC needs decent hardware. Aim for at least 16 GB of RAM, a modern multi-core processor, and ideally a GPU with 8 GB or more of VRAM. If you’re using tools like GPT4All with a 7B model, a mid-range gaming rig with an RTX 3060 or better can handle it. For larger models, such as LLaMA-2-13B, a GPU like the RTX 4090 makes a big difference. Fast SSD storage also shortens model loading times. You don’t need a data center, but your system should be solid.
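These recommendations follow from simple arithmetic: the memory needed for a model’s weights is roughly the parameter count multiplied by the bytes stored per weight. The sketch below is a back-of-the-envelope estimate only. The 20% overhead for context cache and runtime buffers is an illustrative assumption, not a measured figure, and real usage varies with the tool and context length.

```python
def weights_gb(params_billion, bits_per_weight):
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits_in_vram(params_billion, bits_per_weight, vram_gb, overhead=0.2):
    """Rough check: weights plus an assumed overhead fraction must fit in VRAM."""
    needed = weights_gb(params_billion, bits_per_weight) * (1 + overhead)
    return needed <= vram_gb


# Print weight sizes for 7B and 13B models at common precisions.
for name, size in [("7B", 7), ("13B", 13)]:
    for bits in (16, 8, 4):
        print(f"{name} at {bits}-bit: ~{weights_gb(size, bits):.1f} GB of weights")
```

Under these assumptions, a 7B model quantized to 4 bits (about 3.5 GB of weights) fits comfortably on an 8 GB card, while a 13B model at 16-bit precision (about 26 GB) exceeds even the 24 GB of an RTX 4090 and needs quantization to fit.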

Running Gemini Natively via Browser or Scripts

You can get a taste of running Gemini natively by using Gemini Nano in your browser. This lightweight version of Google’s AI can run client-side in Chrome without constant calls to cloud APIs. It’s limited in scope but works well for casual use cases. For something more flexible, Gemini CLI allows local scripting using the Gemini API. It’s not technically offline, but it integrates smoothly with terminal workflows and development environments. This is handy if you’re testing or automating tasks on a dev machine.
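For the scripting route, the same kind of automation can be done by calling the Gemini API directly from a short script. This is a minimal sketch rather than how Gemini CLI itself works. It assumes you have an API key stored in an environment variable named `GEMINI_API_KEY` and that the model name `gemini-2.0-flash` is available to your account; both are assumptions to adjust. It requires an internet connection.

```python
import json
import os
import urllib.request

API_ROOT = "https://generativelanguage.googleapis.com/v1beta"


def build_gemini_request(prompt, api_key, model="gemini-2.0-flash"):
    """Build (but do not send) a generateContent request for the Gemini API."""
    payload = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{API_ROOT}/models/{model}:generateContent",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,
        },
        method="POST",
    )


def ask_gemini(prompt, api_key=None, **kwargs):
    """Send the prompt to Google's servers and return the first reply text."""
    # Assumption: the key lives in the GEMINI_API_KEY environment variable.
    api_key = api_key or os.environ["GEMINI_API_KEY"]
    request = build_gemini_request(prompt, api_key, **kwargs)
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["candidates"][0]["content"]["parts"][0]["text"]
```

Calling `ask_gemini("Summarize this changelog: ...")` from a script or scheduled task gives terminal-friendly automation, but every prompt still travels to Google’s servers, which is the key difference from the local tools above.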

There’s growing interest in local AI tooling. AMD’s new Gaia initiative, for example, is an open-source framework for running LLMs on Ryzen-powered PCs. It supports retrieval-augmented generation (RAG) and is designed for Windows users. Combined with tools like Ollama, which lets you download and run open models with a few simple commands, local inference is becoming more accessible. These platforms are still maturing but are moving fast. Within a year or two, running GPT-level AI natively may be as simple as installing a browser.

Can You Fully Replace Cloud AI Yet?

In short, not yet. While you can get surprisingly close with the right mix of hardware and tools, the official ChatGPT and Gemini models are still out of reach for local use. You’re trading convenience and accuracy for control and privacy. Open-source models like Mistral, LLaMA 2, and Vicuna can do a lot, but they don’t yet match GPT-4’s level across all benchmarks. Still, for hobbyists, developers, and anyone curious, the local options are more than capable of handling day-to-day tasks.

Can You Run ChatGPT or Gemini Natively?

If your definition of running ChatGPT or Gemini natively means operating the exact models offline with no dependencies, the answer is no. But if you’re okay with approximations and alternative tools, there are several impressive ways to achieve a similar experience. With tools like GPT4All, LM Studio, and Gemini CLI, your gaming PC can do far more than just run games. It can host AI models, process prompts locally, and even operate within secure environments. And as local AI tooling keeps improving, offline models that truly rival ChatGPT and Gemini might not be too far away.

